
I am currently a Senior ML Engineer at Apple Inc., where I work on improving, scaling, and optimizing the Voice Trigger system for Siri across Apple devices. Previously, I was a researcher/computer scientist at SRI International (formerly Stanford Research Institute), where I worked on cross-disciplinary machine learning and deep learning applications. At SRI International, I worked on model quantization/pruning (SRI spin-off LatentAI), AMD, and DASL. I completed my Master's at the University of Minnesota, Twin Cities, where I was part of Prof. Mingyi H.'s research group.

Email / Bio / Google Scholar

I'm interested in applied research on model quantization/pruning, foundation model optimization, and on-device ML, and I often work on cross-disciplinary projects.

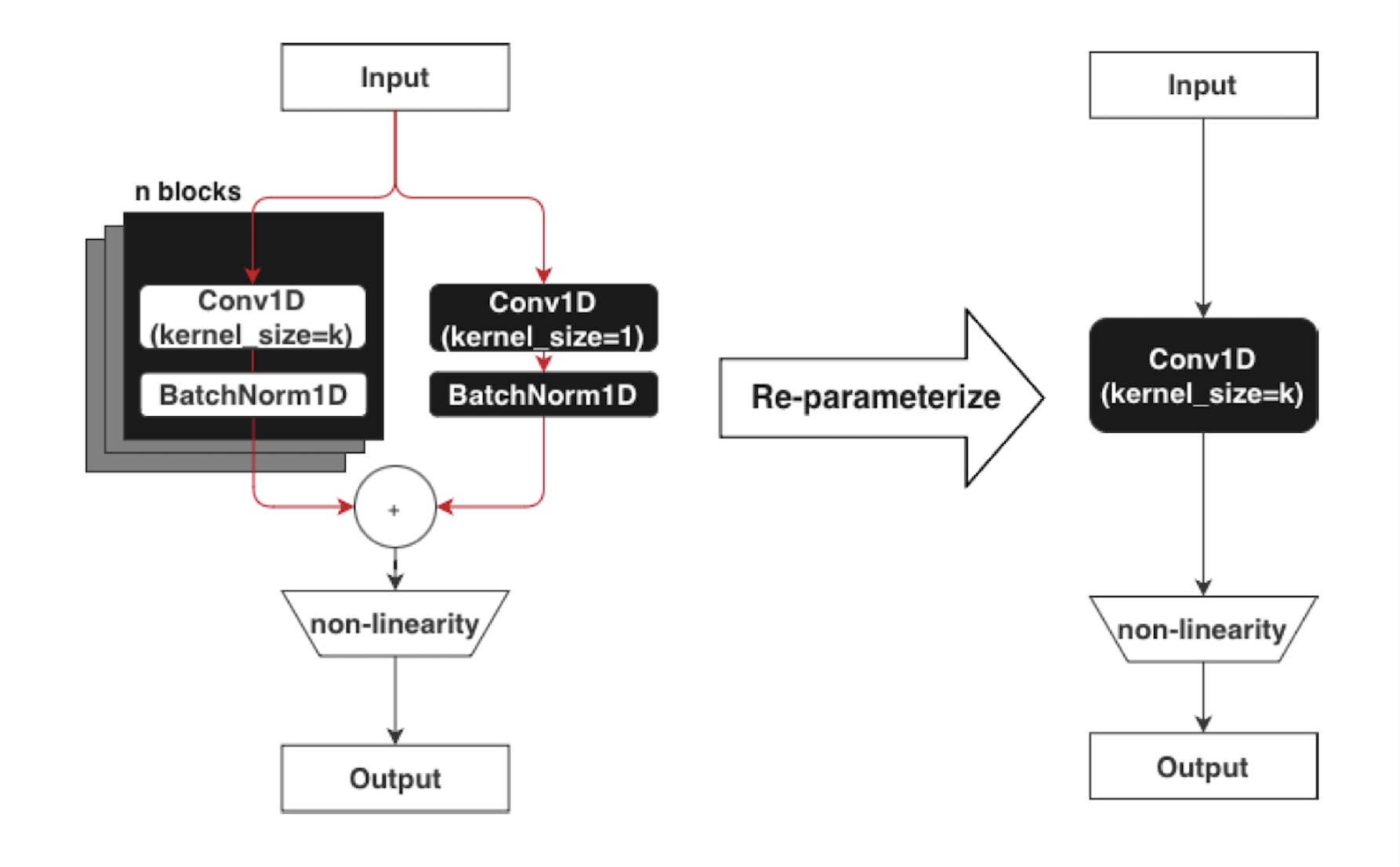

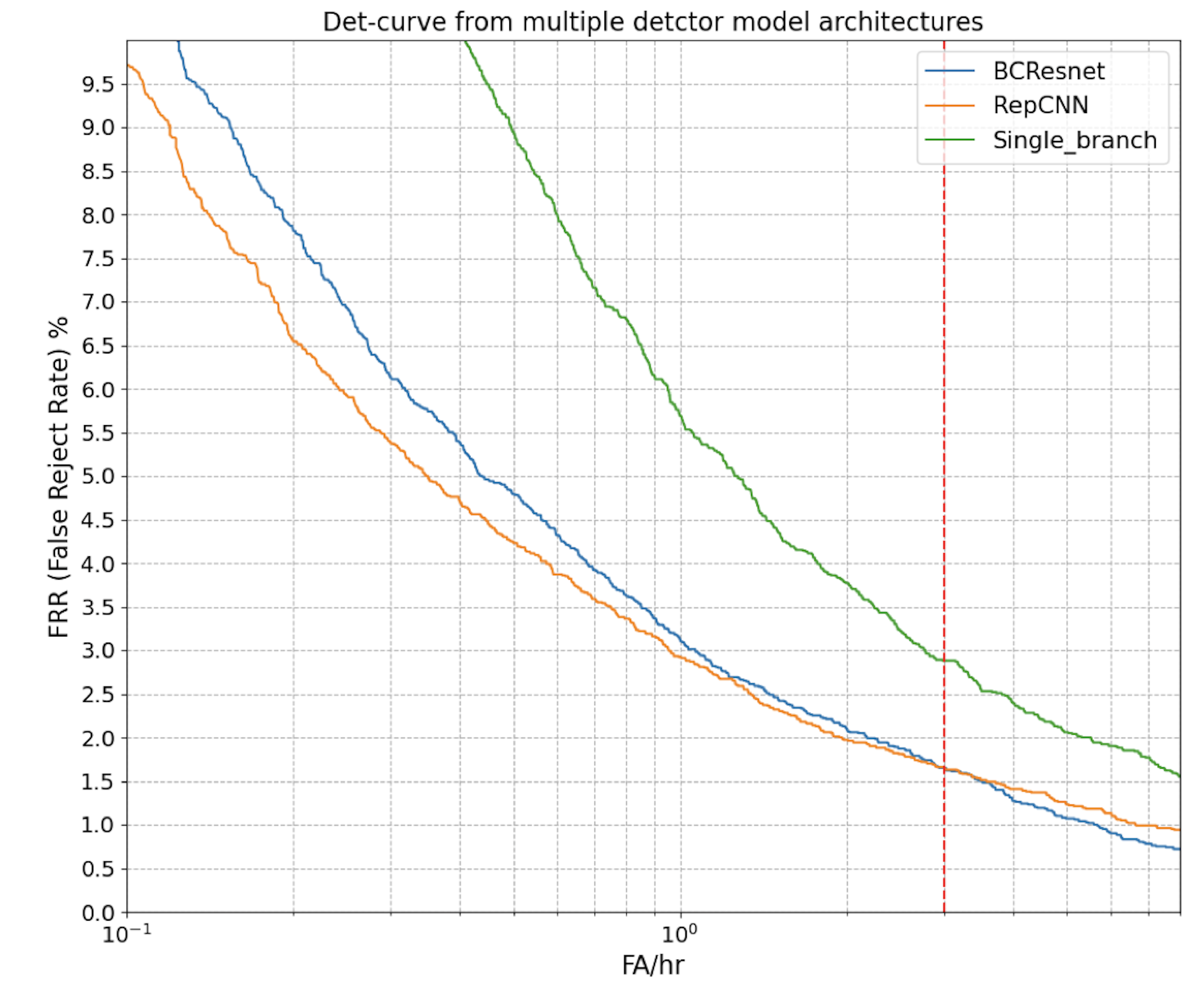

Prateeth Nayak, Arnav Kundu, et al., arXiv, InterSpeech 2024

We introduce a new architecture and training approach for keyword detection on hardware with very low compute and memory budgets. The model is over-parameterized during training and re-parameterized for inference, resulting in 2x lower peak memory usage and 10x faster on-device runtime.
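The paper's exact scheme is not reproduced here. As a minimal sketch of one common form of the over-parameterize/re-parameterize trick (linear over-parameterization, assumed for illustration), a layer can be trained as two stacked linear maps with no nonlinearity between them and then folded into a single, smaller layer for inference using $W = W_2 W_1$ and $b = W_2 b_1 + b_2$. All function names below are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Compute W @ x for a row-major matrix W."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Compute A @ B, where A is (m x k) and B is (k x n)."""
    n_cols = len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(n_cols)]
        for i in range(len(a))
    ]


def add(a: Vector, b: Vector) -> Vector:
    return [ai + bi for ai, bi in zip(a, b)]


def train_forward(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """Hypothetical training-time graph: expand to a wider hidden size, then
    project back. No nonlinearity sits between the layers, so the pair is linear."""
    hidden = add(matvec(w1, x), b1)
    return add(matvec(w2, hidden), b2)


def reparameterize(w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Tuple[Matrix, Vector]:
    """Fold the two layers into one: W = W2 @ W1, b = W2 @ b1 + b2."""
    return matmul(w2, w1), add(matvec(w2, b1), b2)


def infer_forward(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Hypothetical inference-time graph: a single small linear layer."""
    return add(matvec(w, x), b)


if __name__ == "__main__":
    x = [1.0, -2.0]
    w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]  # 2 -> 4 expansion
    b1 = [0.5, 0.0, -1.0, 0.0]
    w2 = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]      # 4 -> 2 projection
    b2 = [0.0, 1.0]

    w, b = reparameterize(w1, b1, w2, b2)
    print(train_forward(x, w1, b1, w2, b2))  # [-0.5, 3.0]
    print(infer_forward(x, w, b))            # [-0.5, 3.0]
```

In this toy case the training graph holds 42 parameters while the folded layer holds 6, yet both produce identical outputs. The extra capacity only affects optimization, not the deployed model's cost.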

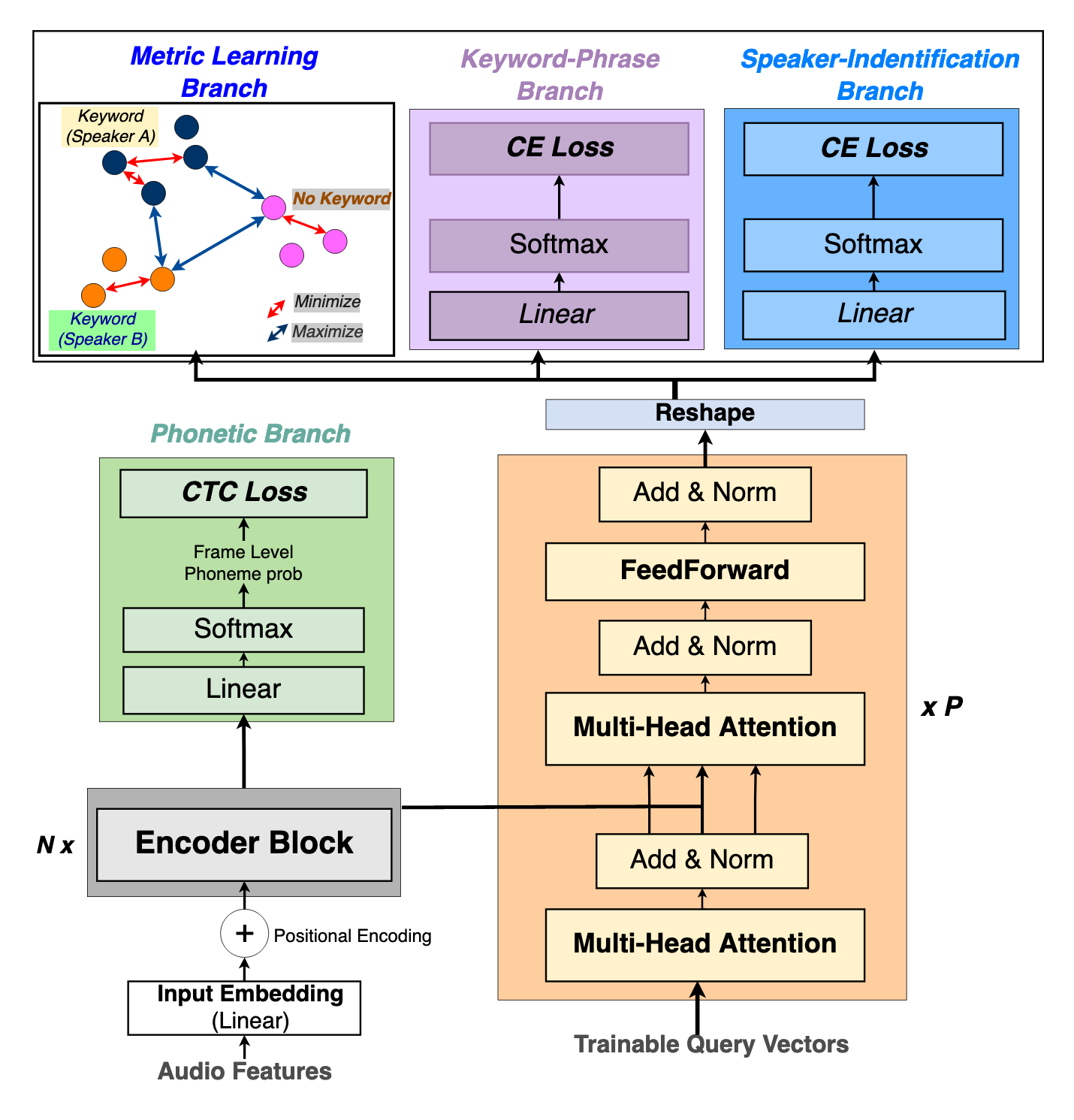

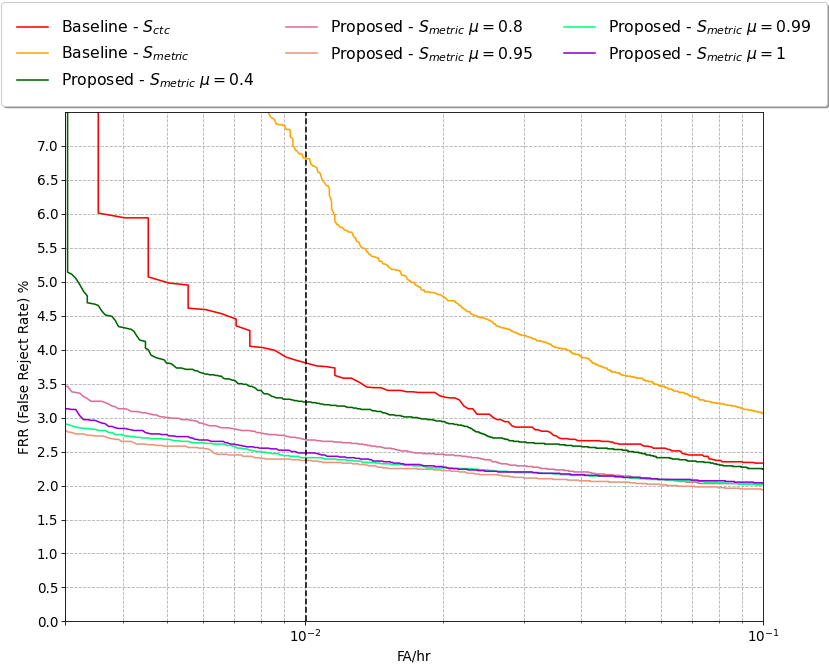

Prateeth Nayak, Takuya Higuchi, et al., arXiv, InterSpeech 2022

We improve the voice trigger detection performance of a smartphone assistant by using a small number of utterances from the target speaker in a multi-task learning framework that outputs a personalized embedding for each utterance.

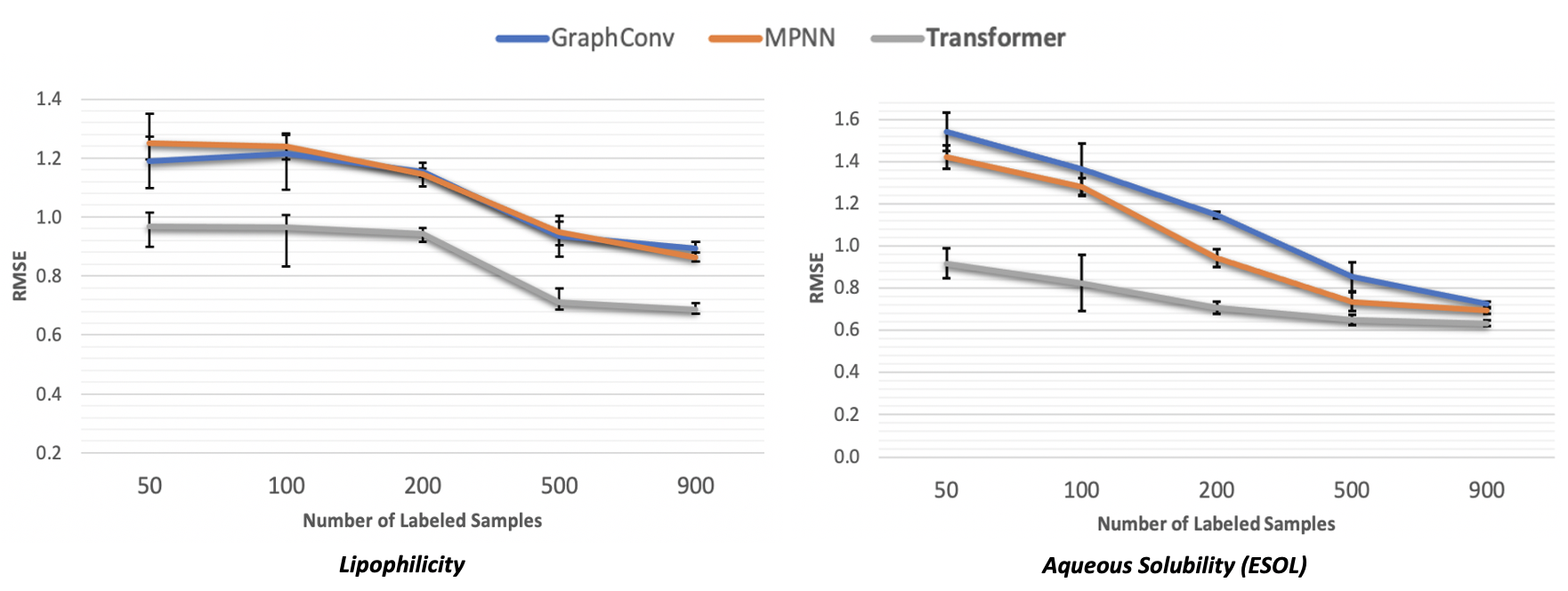

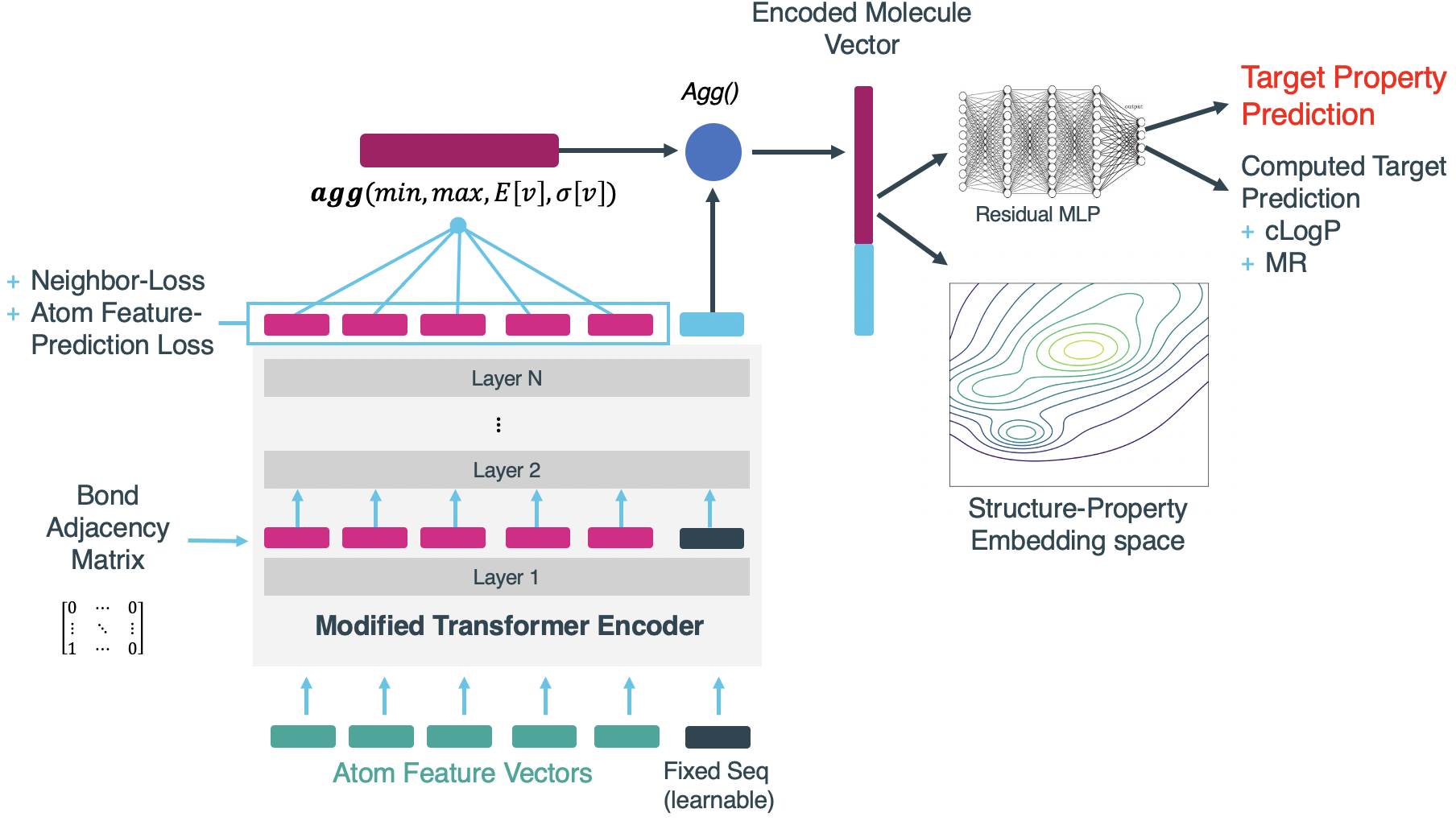

Prateeth Nayak, Andrew Silberfarb, Ran Chen, Tulay Muezzinoglu, John Byrnes, ml4Molecules @ NeurIPS 2020

We introduce a modified Transformer-based architecture that takes atom-level feature vectors as inputs and aims to encode a better molecular representation space, one that effectively captures the structure-property relations of a molecule.

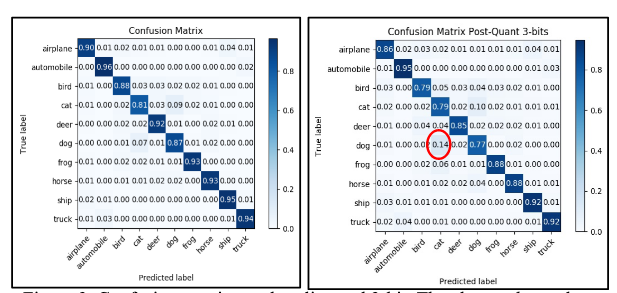

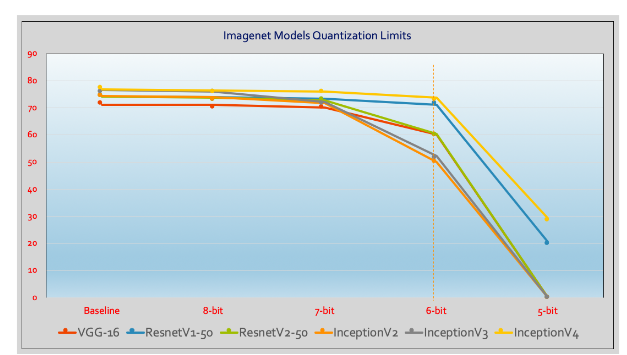

Prateeth Nayak, David Zhang, Sek Chai, EMC^2 @ NeurIPS 2019

We present a comparison of data-free post-training quantization methods for state-of-the-art models trained on datasets such as ImageNet. To better analyze the quantization results, we describe the overall range and local sparsity of values afforded by various quantization schemes.
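For readers unfamiliar with the setting, the simplest data-free post-training baseline is symmetric per-tensor uniform quantization: the step size comes only from the weights' range, so no calibration data is needed. The sketch below is an illustration, not one of the compared methods; the function names are hypothetical, and measuring "sparsity" as the share of values that land on the zero level is an assumption made here for simplicity.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], num_bits: int = 8) -> Tuple[List[int], float]:
    """Per-tensor symmetric uniform quantization using only the weights (no data)."""
    qmax = 2 ** (num_bits - 1) - 1          # e.g. 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)  # the tensor's range sets the step size
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest level (Python's round() breaks ties to even),
    # then clamp to the representable range [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integer levels back to real values."""
    return [qi * scale for qi in q]


def zero_fraction(q: List[int]) -> float:
    """Share of values mapped to the zero level (an assumed sparsity measure)."""
    return sum(1 for qi in q if qi == 0) / len(q)


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.001, 0.25]
    q, scale = quantize_symmetric(weights, num_bits=4)
    recon = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, recon))
    print(q, scale, max_err, zero_fraction(q))
```

A single large-magnitude weight widens the range and therefore the step size, which pushes small weights such as `0.001` onto the zero level. This interplay between range and local sparsity is what makes different schemes behave differently.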

Outside of work ...